FluxGym! Train FLUX LoRAs locally on limited VRAM

All right, now we're cooking with gas! There is still hope for those of us less fortunate, who chickened out of selling a kidney to buy a GPU with 24GB of VRAM and unleash the full power of training FLUX LoRAs locally!

Training FLUX LoRAs has been tough for users with limited VRAM. It takes a lot of compute, and many solutions require at least 24GB of VRAM. That makes it hard for anyone with a lower-end setup to train their own models, and these hardware demands keep many people from using advanced machine-learning techniques at all.

Some WebUIs make it easier but still need a lot of VRAM. Meanwhile, scripts for training FLUX LoRAs are more powerful but require working in a terminal, which isn’t ideal for users who want a simpler experience. This leaves a gap: users either deal with high hardware demands or tackle a steep learning curve. That is why many turned to platforms like civitai.com for their training tasks. But a lot of people who wanted to train a LoRA on photos of themselves or their family didn't feel comfortable uploading a whole bunch of personal photos online.

FluxGym: Simple FLUX LoRA Training Web UI for Low VRAM

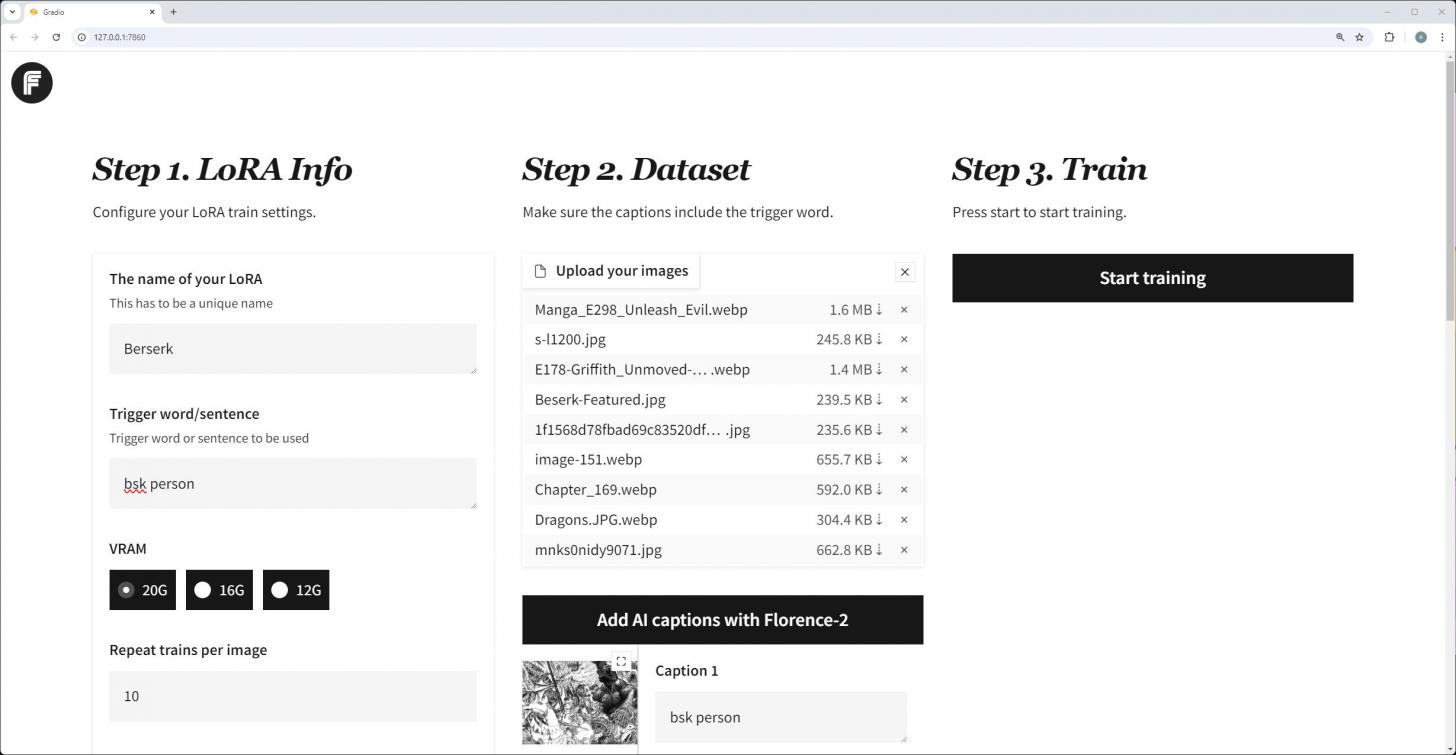

Enter FluxGym, a new tool that solves this problem. FluxGym combines the ease of a WebUI with the flexibility of terminal-based scripts, letting users train FLUX LoRAs on machines with 12GB, 16GB, or 20GB of VRAM.

As an example of FluxGym’s performance, training a LoRA for 1,200 steps on a machine with 20GB of VRAM takes about 20 minutes. FluxGym also resizes images automatically to make training faster and smoother, even on limited resources. For those who want more control, the tool offers advanced settings to tweak the training process.
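To put the "1,200 steps in about 20 minutes" figure in context, here is a minimal sketch of how step counts and per-step speed relate. It assumes a simplified version of how Kohya-style trainers count steps (images × repeats ÷ batch size per epoch, times epochs), ignoring aspect-ratio bucketing and gradient accumulation. The helper names and the 10 images / 10 repeats / 12 epochs split are hypothetical; the article does not say which settings produced its number.

```python
import math


def total_steps(num_images: int, repeats: int, epochs: int, batch_size: int = 1) -> int:
    """Simplified step count: ceil(images * repeats / batch_size) per epoch, times epochs.

    Assumption: no bucketing effects and no gradient accumulation.
    """
    steps_per_epoch = math.ceil(num_images * repeats / batch_size)
    return steps_per_epoch * epochs


def seconds_per_step(steps: int, minutes: float) -> float:
    """Average wall-clock time per training step."""
    return minutes * 60 / steps


# Hypothetical split that happens to land on 1,200 steps.
steps = total_steps(num_images=10, repeats=10, epochs=12)
print(steps)
print(seconds_per_step(steps, minutes=20))
```

Under these assumptions, 1,200 steps in 20 minutes works out to roughly one second per step, which is a handy baseline when comparing your own runs.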

And with the latest update from the developer, you can theoretically run FluxGym on 16GB, 12GB, or even 8GB of VRAM (!!!)

Here's the GitHub repository: https://github.com/cocktailpeanut/fluxgym

The frontend uses a Gradio-based WebUI, known for its user-friendliness, while the backend leverages Kohya Scripts, which are efficient and great for low VRAM setups. This combo lets users train models on their local machines without needing super powerful hardware.

In short, FluxGym gives users with low VRAM machines the power to train FLUX LoRAs. By combining a friendly WebUI with strong backend scripts, it makes machine learning more accessible without requiring high-end hardware.

No doubt I'll be testing this software once my modest 16GB card arrives. If push comes to shove, I'm no stranger to working in a Linux terminal, but it's nice to have a GUI. Either way, I'm quite optimistic.

[ Hey, I've recently tried a quantized FLUX on a CPU with 8GB of RAM, haha, and well, it does run, kind of. ]

Community Feedback

One Redditor shared that he had previously trained a LoRA on his own images, but the results were consistently uninteresting: the model produced the same facial expressions and clothing regardless of the prompt.

In a recent attempt, he reduced the number of training photos from 17 to 7 and found the outcomes to be significantly improved. The training was conducted using the default settings of FluxGym on an RTX 4090 for approximately 3.5 hours.

He noted that FluxGym, when combined with Pinokio, offers a straightforward one-click installation process. The user expressed gratitude that the software operates locally and is free, unlike some other paid solutions.

Read the earliest community feedback about the software on Reddit: https://www.reddit.com/r/StableDiffusion/comments/1faj88q/fluxgym_dead_simple_flux_lora_training_web_ui_for/

Sources:

https://x.com/cocktailpeanut/status/1832084951115972653

Update, 02 October 2024

After setting up my new system, I rushed to try out FluxGym, but sadly I couldn't finish training my LoRA because the process was killed with a SIGKILL. I tried only a few images with around 7 epochs and the 12GB setting (I have 16GB of VRAM, so I tried the 16GB setting too). Skill issue?

I was able to run quantized FLUX models in ComfyUI successfully, though, so at least my system can handle that. Whether training will be feasible on it, however, remains a big question.

Update, 03 October 2024

OK, so I replaced the original model (unet) and clip with fp8 versions of Schnell. That's 12GB instead of 24GB, so much better, and now I'm getting some results. You can't use GGUF models for training, but lowering the precision by choosing fp8 versions is an option. I'll keep trying and keep you posted on my results.
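The 12GB vs 24GB difference is mostly simple arithmetic on weight precision: the same number of parameters stored at 1 byte each instead of 2. Here is a minimal sketch, assuming the FLUX.1 transformer has roughly 12 billion parameters and that the full-size file is stored at 16 bits per weight. It counts weights only; text encoders, the VAE, activations and LoRA optimizer state all add to real memory use. The function and constant names are hypothetical.

```python
# Rough memory needed just to hold model weights at different precisions.
# Assumption: FLUX.1 transformer has about 12 billion parameters.
FLUX_PARAMS = 12e9

# Bytes used to store one parameter at each precision.
BYTES_PER_PARAM = {
    "fp32": 4,
    "bf16": 2,  # 16-bit weights
    "fp8": 1,   # 8-bit float weights
}


def weight_memory_gb(num_params: float, dtype: str) -> float:
    """Return weight memory in gigabytes (10**9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


for dtype in ("bf16", "fp8"):
    print(f"{dtype}: ~{weight_memory_gb(FLUX_PARAMS, dtype):.0f} GB")
```

Halving the bytes per parameter halves the weight footprint, which lines up with the roughly 24GB and 12GB model files mentioned above.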

Have you been able to run FluxGym on low VRAM? Share in the comments!

Last modified 28 October 2024 at 20:25

Published: Sep 10, 2024 at 10:41 AM
