CPU-Only FLUX Schnell on Linux (GGUF)

Ok, I just had to try this, even though I knew my hardware was not made for tasks of this nature at all. I don't play games on this machine, and for all my other tasks my Intel NUC has been a crazy fast horse, and a quiet one too. But now that generative AI is here, I'll have to upgrade to an NVIDIA GPU system with CUDA support for best results.

But while I'm deciding on all the parts, the suppliers and my next Linux distro, I've decided to torture my little old integrated-graphics mini PC by making it run ComfyUI with FLUX Schnell in CPU-only mode, if only to learn what the process is and how ComfyUI looks from the inside.

I obviously can't recommend this setup (no GPU), but someone might have a powerful processor and tons of RAM. Others might find this useful for installing on Linux with a GPU, because the process is almost identical, and actually simpler on a GPU system. I had to google and chat with ChatGPT (which was mostly useless) to find out how to set it up without a graphics card.

-

First, I used this guide to install ComfyUI: https://medium.com/@yushantripleseven/installing-comfyui-linux-windows-b59a57af61b6
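If you'd rather script the basics, here's a rough sketch of what the core of such an install boils down to. It assumes the official repository at github.com/comfyanonymous/ComfyUI, a `python3` with the standard `venv` module, and a default install directory of `~/ComfyUI`; the helper names are my own and hypothetical, and the linked guide may differ in details. PyTorch is deliberately left out because the CPU-only wheel needs special handling, as described next.

```bash
#!/usr/bin/env bash
# Sketch: fetch ComfyUI and give it its own virtual environment.
# Assumptions (not from the guide): default dir ~/ComfyUI, python3 has venv.
# Helper names are hypothetical, not part of ComfyUI.

COMFY_REPO_URL="https://github.com/comfyanonymous/ComfyUI"

# Print the path of the venv's Python interpreter for an install dir.
comfy_venv_python() {
  printf '%s/venv/bin/python\n' "${1:-$HOME/ComfyUI}"
}

# Clone the repo if it is missing, then create a venv inside it.
# PyTorch is NOT installed here: the CPU wheel goes in before requirements.txt.
setup_comfyui() {
  local dir="${1:-$HOME/ComfyUI}"
  if [ ! -d "$dir/.git" ]; then
    git clone "$COMFY_REPO_URL" "$dir" || return 1
  fi
  python3 -m venv "$dir/venv" || return 1
  "$(comfy_venv_python "$dir")" -m pip install --upgrade pip
}
```

Usage: `source` the file, run `setup_comfyui "$HOME/ComfyUI"`, then `source ~/ComfyUI/venv/bin/activate` before installing anything else.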

Except that, since I have no GPU, the standard command

pip install torch torchvision torchaudio --extra-index-url https://download.pytorch.org/whl/cu121

was downloading ginormous quantities of NVIDIA-related stuff. So I found an older PyTorch release on the CPU-only wheel index and used:

# CPU only

pip install torch==2.3.1 torchvision==0.18.1 torchaudio==2.3.1 --index-url https://download.pytorch.org/whl/cpu
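If you're scripting this, order probably matters: installing the pinned CPU wheels before ComfyUI's `requirements.txt` should stop pip from pulling the default CUDA-enabled wheel, since the unpinned `torch` requirement is then already satisfied. The sketch below assumes an activated venv and that you run it from the ComfyUI directory; the function names are hypothetical. On Linux, wheels from the `whl/cpu` index report a version with a `+cpu` suffix (e.g. `2.3.1+cpu`), which makes for a cheap sanity check.

```bash
#!/usr/bin/env bash
# Sketch: CPU-only PyTorch first, then ComfyUI's own requirements.
# Assumes an activated venv and that the current dir is the ComfyUI checkout.
# Function names are hypothetical helpers, not part of ComfyUI or PyTorch.

TORCH_CPU_INDEX="https://download.pytorch.org/whl/cpu"

install_cpu_deps() {
  # Versions pinned as in the post (torch 2.3.1 pairs with torchvision 0.18.1).
  pip install torch==2.3.1 torchvision==0.18.1 torchaudio==2.3.1 \
    --index-url "$TORCH_CPU_INDEX" || return 1
  # torch is already satisfied now, so pip should keep the CPU build.
  pip install -r requirements.txt
}

# Succeed if a torch version string looks like a CPU-only Linux wheel.
torch_is_cpu_build() {
  case "$1" in
    *+cpu) return 0 ;;
    *) return 1 ;;
  esac
}

# Ask the installed torch for its version and report whether it is the CPU build.
check_torch() {
  local version
  version="$(python -c 'import torch; print(torch.__version__)')" || return 1
  if torch_is_cpu_build "$version"; then
    echo "OK: torch $version (CPU build)"
  else
    echo "Warning: torch $version does not look like the CPU wheel" >&2
    return 1
  fi
}
```

Usage: `install_cpu_deps && check_torch`. If the check warns, pip most likely replaced the CPU wheel with a CUDA one along the way.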

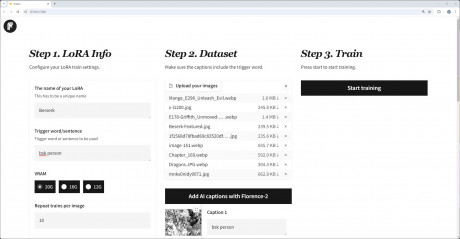

Then I installed this glorious ComfyUI-GGUF custom node: https://github.com/city96/ComfyUI-GGUF

GGUF is a file format for quantized models; quantization makes models much smaller, which is what makes them usable on low-VRAM GPUs. You can read more about quants in this Reddit post: https://www.reddit.com/r/StableDiffusion/comments/1eslcg0/excuse_me_gguf_quants_are_possible_on_flux_now/
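Installing a custom node mostly means cloning it into ComfyUI's `custom_nodes` folder. Here's a minimal sketch, assuming an activated ComfyUI venv and a checkout at `~/ComfyUI`. As far as I can tell from the project's README, the only extra inference dependency is the `gguf` Python package, but check the repo in case that has changed. The helper names are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: install the ComfyUI-GGUF custom node into an existing ComfyUI checkout.
# Assumes an activated ComfyUI venv; helper names are hypothetical.

GGUF_NODE_REPO="https://github.com/city96/ComfyUI-GGUF"

# ComfyUI picks up custom nodes from <comfy>/custom_nodes/<node name>.
gguf_node_dir() {
  printf '%s/custom_nodes/ComfyUI-GGUF\n' "${1:-$HOME/ComfyUI}"
}

install_gguf_node() {
  local comfy="${1:-$HOME/ComfyUI}" target
  target="$(gguf_node_dir "$comfy")"
  if [ ! -d "$target" ]; then
    git clone "$GGUF_NODE_REPO" "$target" || return 1
  fi
  # The node reads .gguf files through the `gguf` Python package.
  pip install --upgrade gguf
}
```

Usage: `install_gguf_node "$HOME/ComfyUI"`, then restart ComfyUI, since custom nodes are loaded at startup.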

-

For FLUX itself, I also relied on this video tutorial. It's for Windows, but the directory structure is the same, so it's handy for knowing where each file belongs within ComfyUI: https://www.youtube.com/watch?v=nncY3dJLV78
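For orientation, here's a sketch of the model folders a FLUX GGUF setup typically touches. The mapping is my assumption based on the standard ComfyUI layout (the GGUF model in `models/unet`, text encoders in `models/clip`, the VAE in `models/vae`, LoRAs such as the Realism LoRA in `models/loras`); the helper name is hypothetical, and newer ComfyUI versions may name some folders differently.

```bash
#!/usr/bin/env bash
# Sketch: create the model folders a FLUX GGUF setup typically uses.
# Assumed mapping (standard ComfyUI layout, not spelled out in the post):
#   unet  -> the .gguf FLUX model (ComfyUI-GGUF loads it from here)
#   clip  -> text encoders (CLIP-L and T5-XXL)
#   vae   -> the FLUX autoencoder
#   loras -> LoRAs such as the Realism LoRA
FLUX_MODEL_SUBDIRS="unet clip vae loras"

# Create any missing model folders under the given ComfyUI directory.
make_flux_dirs() {
  local comfy="${1:-$HOME/ComfyUI}" sub
  for sub in $FLUX_MODEL_SUBDIRS; do
    mkdir -p "$comfy/models/$sub" || return 1
  done
}
```

Usage: `make_flux_dirs "$HOME/ComfyUI"`. Folders that already exist are left untouched, because `mkdir -p` doesn't complain about them.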

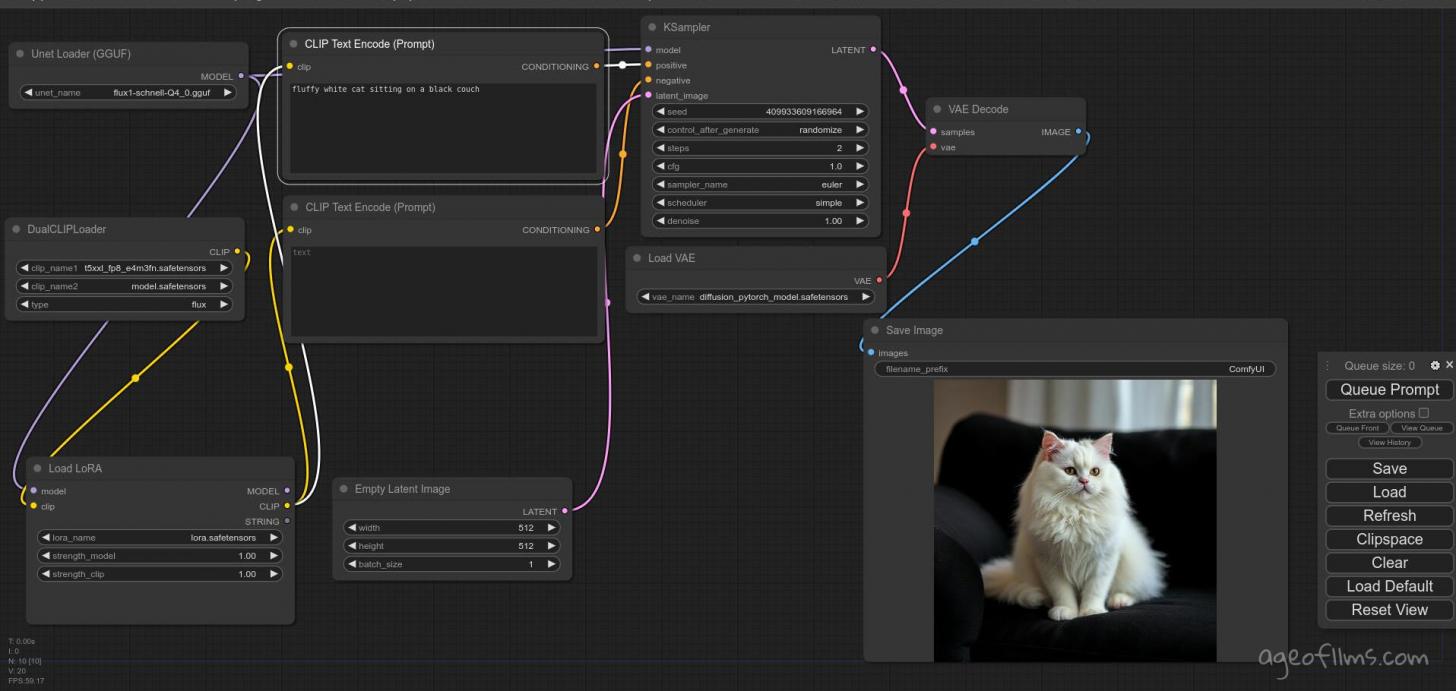

So I grabbed the FLUX GGUF files here: https://huggingface.co/city96/FLUX.1-schnell-gguf/tree/main

I picked flux1-schnell-Q4_0.gguf, which works quite well while still being under 7 GB.
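If you'd rather fetch the file from the terminal, here's a minimal sketch. It assumes Hugging Face's `/resolve/main/` direct-download URL pattern, `curl` on your PATH, and that the GGUF model belongs in `models/unet`; the helper names are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: download the Q4_0 FLUX Schnell GGUF into ComfyUI's unet folder.
# Assumes Hugging Face's /resolve/main/ download URL pattern and curl.
# Helper names are hypothetical.

FLUX_REPO="city96/FLUX.1-schnell-gguf"
FLUX_FILE="flux1-schnell-Q4_0.gguf"   # the quant picked in the post

# Build a direct download URL for a file on a repo's main branch.
hf_file_url() {
  printf 'https://huggingface.co/%s/resolve/main/%s\n' "$1" "$2"
}

download_flux_gguf() {
  local comfy="${1:-$HOME/ComfyUI}" file="${2:-$FLUX_FILE}"
  local dest="$comfy/models/unet/$file"
  mkdir -p "$(dirname "$dest")" || return 1
  # --location follows the redirect to the file host; --continue-at - resumes
  # an interrupted download instead of restarting a multi-GB file from zero.
  curl --fail --location --continue-at - --output "$dest" \
    "$(hf_file_url "$FLUX_REPO" "$file")"
}
```

Usage: `download_flux_gguf "$HOME/ComfyUI"`. To try a different quant, pass another file name from the repo listing as the second argument.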

Flux Schnell with Realism LoRA + Q4_0.gguf, 4 steps

That's after initially going with the smallest one, flux1-schnell-Q2_K.gguf, which rendered a very noisy image even though I used many more steps with it.

Flux Schnell with Realism LoRA + Q2_K.gguf, 4 steps

So don't bother getting that one. The larger quants will probably work even better.

Once you've got everything in place, if you're on a CPU-only system, you need to start ComfyUI with this command:

python main.py --cpu

That little flag does the trick: it tells the program to stop looking for a GPU and gets rid of the errors you'd otherwise get by just calling 'python main.py'.
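If you move the same install between machines, a small launcher can add `--cpu` only when it's needed. This is a sketch under my own assumption that "an NVIDIA GPU is present" can be approximated by `nvidia-smi -L` succeeding; it won't detect other GPU vendors, and the helper names are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: start ComfyUI with --cpu only when no NVIDIA GPU is visible.
# The nvidia-smi probe is an assumption and only covers NVIDIA cards.
# Helper names are hypothetical.

# Print "--cpu" unless the probe command exists and lists a GPU.
pick_device_flag() {
  local probe="${1:-nvidia-smi}"
  if command -v "$probe" >/dev/null 2>&1 && "$probe" -L >/dev/null 2>&1; then
    return 0              # GPU listed: print nothing, ComfyUI uses it
  fi
  printf '%s\n' "--cpu"   # no usable NVIDIA GPU found: force CPU mode
}

start_comfy() {
  local comfy="${1:-$HOME/ComfyUI}" flag
  flag="$(pick_device_flag)"
  # The subshell keeps the caller's working directory unchanged.
  # ${flag:+"$flag"} passes --cpu only when the probe found no GPU.
  ( cd "$comfy" && python main.py ${flag:+"$flag"} )
}
```

Usage: with the venv activated, run `start_comfy "$HOME/ComfyUI"`. On a GPU-less box like mine it ends up running `python main.py --cpu`.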

That's all there is to it. Queue your first prompt and, 2 hours later, voilà!

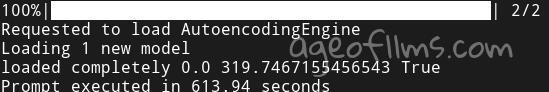

Just kidding, it 'only' took 10 minutes to complete a 512 px image with 2 steps. (This is an Intel Core i5 processor with only 8 GB of RAM, folks!)

Actually, I just checked, and this last image took just over 10 minutes (613 seconds).
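For the record, here's the arithmetic behind "just over 10 minutes", plus a rough per-step figure. The per-step split is naive: it assumes all 613 seconds went into the 2 sampling steps, although model loading may well have taken part of that time. The helper names are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: turn the measured render time into minutes/seconds and a rough
# per-step figure. Naive split: assumes all 613 s went into the 2 steps,
# although model loading may have taken part of that time.

# Format whole seconds as "M min S s".
fmt_duration() {
  printf '%d min %d s\n' "$(( $1 / 60 ))" "$(( $1 % 60 ))"
}

# Whole seconds per step (shell arithmetic truncates the remainder).
secs_per_step() {
  printf '%d\n' "$(( $1 / $2 ))"
}

fmt_duration 613       # prints: 10 min 13 s
secs_per_step 613 2    # prints: 306 (306.5 truncated)
```

So each step costs roughly five minutes on this CPU, which is why every extra step hurts so much.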

Man, that reminds me of the dial-up modem days!

3 steps give much better quality, but an even longer wait:

I'm guessing it shouldn't take more than a minute or so on a decent GPU system, especially on Linux, which uses only a small fraction of the resources Windows casually eats up.

So there you have it: a showcase of FLUX on a GPU-less system. Hopefully, you won't have to use one like this :)

Published: Aug 25, 2024 at 3:07 PM
