Live Portrait AI: Facial Expressions Wizard

Live Portrait AI is an advanced tool that brings static images to life with realistic facial expressions and movements. It transfers emotions and expressions from a reference (driving) video to a still image, producing animations that can look remarkably close to real footage. LivePortrait was developed by Kuaishou Technology, whose international brand is Kwai. It is the same company that created the AI video tool Kling.

Live Portrait is also available in ComfyUI through custom nodes built by community developers such as Kijai.

Live Portrait AI Key Features

- Free and open-source

- Realistic facial expression transfer

- Compatible with various image styles (realistic, cartoon, oil paintings, etc.)

- No need for a high-end GPU

How to Access and Use Live Portrait AI

There are three main ways to access this tool online for free:

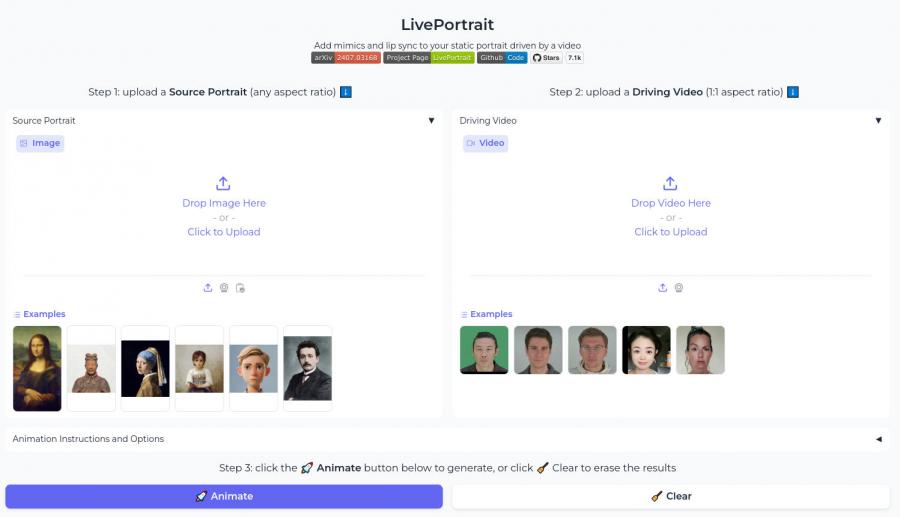

- Hugging Face: A simple interface where you upload your source image and a driving video

- Replicate: Another online platform offering the Live Portrait AI tool

- Google Colab: A notebook that allows you to run the tool without local installation

Step-by-Step Guide

- Choose one of the online methods (e.g., Hugging Face)

- Upload your source image

- Select or upload a driving video with desired expressions

- Click the "Animate" button

- Wait for the AI to process and generate the output video

Live Portrait can handle stylized and cartoonish depictions of human faces. It will sometimes animate human-like monsters and surreal humanoid creatures too, but in my tests the results were underwhelming (though that may have been just my luck).

It works best with clearly outlined, evenly lit faces, and it sometimes struggles with cinematic lighting or with low-quality, low-resolution images.

Distance matters: if the face is framed too tightly, the model can fail to detect it. It is usually best to include some of the shoulders or to upload a loose close-up.
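If a portrait is framed too tightly, one possible workaround (untested here, and not a built-in Live Portrait feature) is to place the image on a larger blank canvas before uploading, so the face takes up less of the frame. The minimal sketch below computes such a canvas size from the face's bounding box. The `MAX_FACE_FRACTION` value of 0.5 and the function names are hypothetical choices for illustration; you would still need to measure the face box yourself and do the actual padding in an image editor.

```python
import math

# Hypothetical threshold (an assumption, not a Live Portrait parameter):
# the face box should span at most half of the canvas width and height.
MAX_FACE_FRACTION = 0.5


def loose_canvas_size(img_w, img_h, face_w, face_h, max_fraction=MAX_FACE_FRACTION):
    """Return (canvas_w, canvas_h) so the face box covers at most
    `max_fraction` of each dimension; never smaller than the original image."""
    if not 0 < max_fraction <= 1:
        raise ValueError("max_fraction must be in the range (0, 1]")
    needed_w = math.ceil(face_w / max_fraction)
    needed_h = math.ceil(face_h / max_fraction)
    return max(img_w, needed_w), max(img_h, needed_h)


def centering_offset(img_w, img_h, canvas_w, canvas_h):
    """Top-left position that centers the original image on the larger canvas."""
    return (canvas_w - img_w) // 2, (canvas_h - img_h) // 2


if __name__ == "__main__":
    # Example: a 512x512 headshot where the face box is 400x450 pixels.
    canvas = loose_canvas_size(512, 512, 400, 450)
    print(canvas)                               # (800, 900)
    print(centering_offset(512, 512, *canvas))  # (144, 194)
```

Filling the added border with a plain color is the simplest choice; whether it actually helps face detection will depend on the image.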

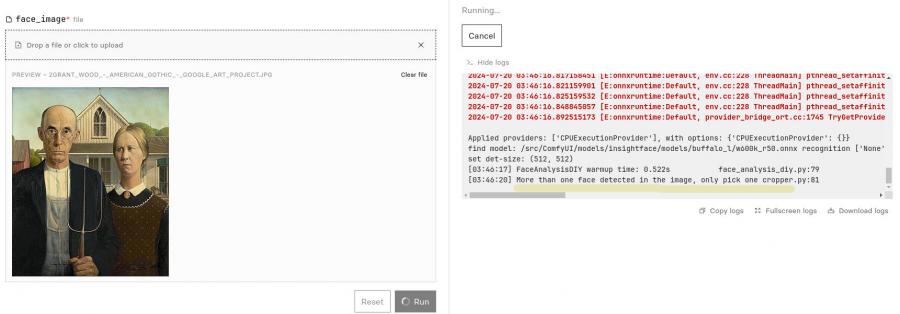

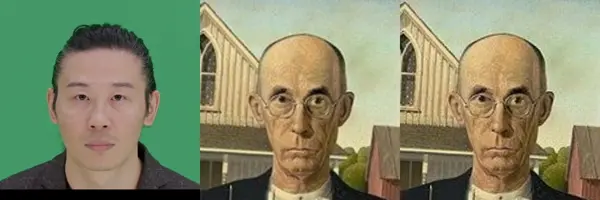

The model can detect multiple faces and is claimed to be able to animate several people at once, but the online versions are so far limited to a single face. Perhaps a local installation with modified settings could animate both "Ma and Pa" from "American Gothic":

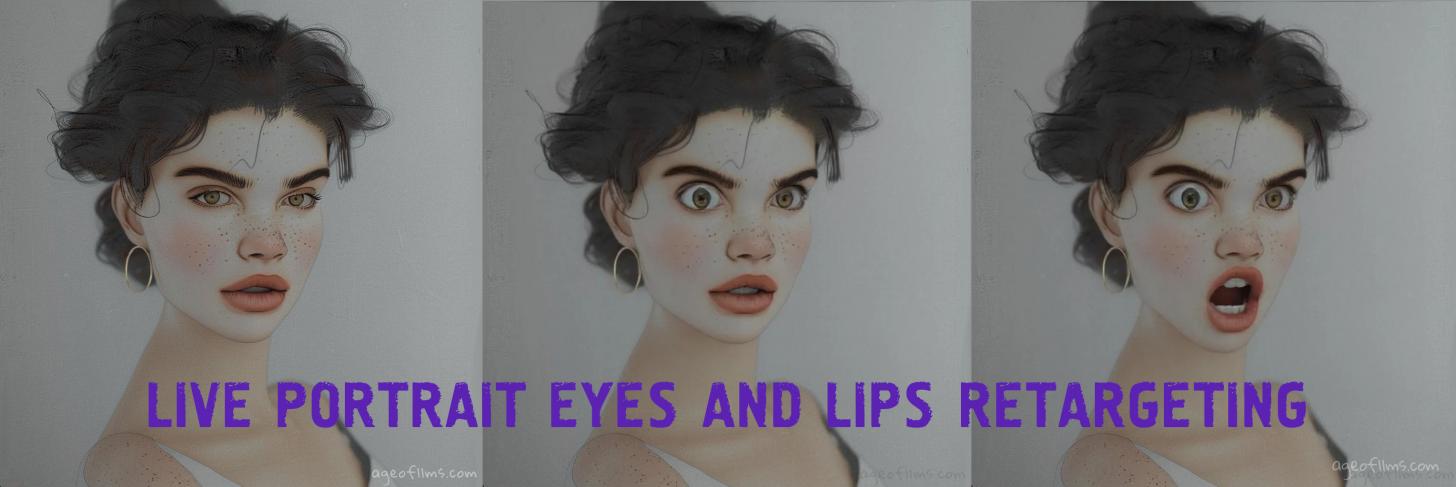

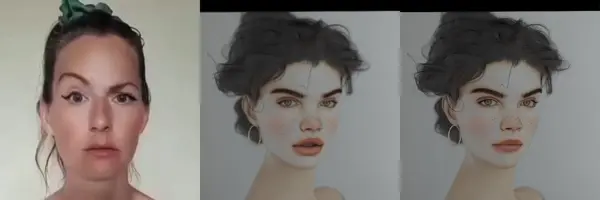

Another use of the Live Portrait model is eye and lip retargeting. Retargeting is a technique in the LivePortrait framework that animates static portraits by controlling the movement of the eyes and lips independently. On the same Hugging Face page, just below the video section, a retargeting option lets you open and close the eyes and lips of your chosen portrait.

Applications and Implications

Live Portrait AI is a major step forward in image-to-video animation. Its ability to create lifelike expressions and movements from static images opens up new creative possibilities for both amateur and professional content creators.

Live Portrait AI has significant potential in various fields:

- AI filmmaking

- Personalized video messages

- Digital content creation

While the technology offers exciting possibilities, like many recent technological advances it also has potential for misuse, such as creating deepfakes for malicious purposes. This raises ethical questions about consent, privacy, and the authenticity of digital content.

My Live Portraits

I went over to Hugging Face and tried animating portraits from some doodles I had generated earlier. It's pretty amazing, all right!

For this next one, I used Replicate's interface with some of my previously generated Midjourney headshots:

Short Live Portrait Test

Here is the generated video, with facial expressions applied to my two static headshots.

Published: Jul 21, 2024 at 3:43 AM