EchoMimicV2: Simplified Audio-Driven Half-Body Animation

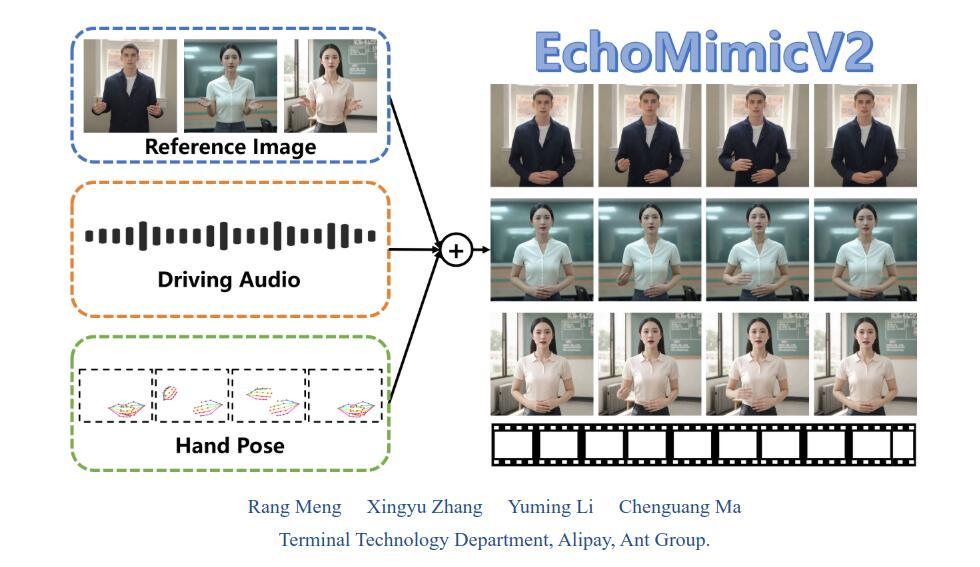

EchoMimicV2 generates high-quality half-body animation videos from a reference image, an audio clip, and a sequence of hand poses. It keeps movements in sync with the audio, going beyond earlier audio-driven animation methods that focus solely on head motion.

Traditional human animation tools often require multiple control conditions and complex setups, or work only on headshots. EchoMimicV2 simplifies the process using its Audio-Pose Dynamic Harmonization (APDH) strategy, which combines Pose Sampling and Audio Diffusion. These methods improve details, facial expressions, and gestures while reducing setup complexity.

The tool also uses Head Partial Attention (HPA) to bring headshot data into training, which compensates for the scarcity of half-body data; HPA can be omitted during inference. Additionally, its Phase-specific Denoising Loss (PhD Loss) guides motion, detail, and overall animation quality at different phases of the denoising process.

Easy-to-Use, Free, and Open Source

EchoMimicV2 is accessible under the Apache-2.0 license, and its code and datasets are available for the community.

The tool runs on a range of GPUs, such as the A100 (80G), RTX 4090D (24G), and V100 (16G), and needs CUDA 11.7 or higher. There's also a quantized version that should still work for those with 12 GB of VRAM.
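The hardware notes above can be turned into a quick check before installing. The sketch below is only an illustration: the function names, the variant labels, and the 16 GB cutoff (taken from the smallest GPU listed) are assumptions, not part of EchoMimicV2.

```python
from typing import Optional, Tuple

# Thresholds taken from the article; the names are illustrative only.
MIN_CUDA: Tuple[int, int] = (11, 7)   # CUDA 11.7 or higher
FULL_MODEL_VRAM_GB = 16.0             # assumption: smallest listed GPU is the V100 (16G)
QUANTIZED_VRAM_GB = 12.0              # quantized version for 12 GB cards


def parse_version(text: str) -> Tuple[int, int]:
    """Turn a version string such as '11.8' or '12' into (major, minor)."""
    parts = text.strip().split(".")
    major = int(parts[0])
    minor = int(parts[1]) if len(parts) > 1 else 0
    return major, minor


def pick_variant(cuda_version: str, vram_gb: float) -> Optional[str]:
    """Return 'standard', 'quantized', or None if the setup looks too small.

    vram_gb is the nominal card size; drivers usually report slightly less.
    """
    if parse_version(cuda_version) < MIN_CUDA:
        return None
    if vram_gb >= FULL_MODEL_VRAM_GB:
        return "standard"
    if vram_gb >= QUANTIZED_VRAM_GB:
        return "quantized"
    return None


if __name__ == "__main__":
    print(pick_variant("11.8", 24))  # an RTX 4090D-class card
    print(pick_variant("12.1", 12))  # a 12 GB card
```

If PyTorch is installed, `torch.version.cuda` and `torch.cuda.get_device_properties(0).total_memory` can supply the two inputs. Reported memory is typically a little below the nominal size, so round up or relax the thresholds accordingly.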

You can find installation instructions and the Hugging Face model on its GitHub page.

Installation and Setup Highlights

- Clone the GitHub repository and install dependencies using Python 3.10 and Conda.

- Download pre-trained weights and configure required paths for components like FFmpeg.

- Launch the Gradio-based interface for a user-friendly animation experience.
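Before launching the interface, it can help to confirm that the environment matches the steps above. The following is a minimal sketch, not the project's own tooling: the helper names are hypothetical, and the `FFMPEG_PATH` environment variable is an assumption about how the FFmpeg location is configured, so check the repository's README for the exact setting.

```python
import os
import shutil
import sys
from typing import List, Mapping, Optional, Tuple


def find_ffmpeg(env: Mapping[str, str]) -> Optional[str]:
    """Look for FFmpeg via FFMPEG_PATH (assumed variable name), then on PATH."""
    configured = env.get("FFMPEG_PATH")
    if configured and os.path.exists(configured):
        return configured
    return shutil.which("ffmpeg")


def preflight(python_version: Tuple[int, int], ffmpeg: Optional[str]) -> List[str]:
    """Return a list of human-readable problems; an empty list means ready."""
    problems: List[str] = []
    if tuple(python_version) != (3, 10):
        found = f"{python_version[0]}.{python_version[1]}"
        problems.append(f"Python 3.10 is expected, found {found}")
    if ffmpeg is None:
        problems.append("FFmpeg not found; install it or configure its path")
    return problems


if __name__ == "__main__":
    issues = preflight(sys.version_info[:2], find_ffmpeg(os.environ))
    for issue in issues:
        print("WARNING:", issue)
    if not issues:
        print("Environment looks ready.")
```

Running the script inside the Conda environment created for the project reports anything missing before the pre-trained weights are downloaded.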

What Sets It Apart?

EchoMimicV2 outperforms existing tools with its smooth, natural animations and precise lip-syncing in multiple languages. It adapts to various accents, syncs body movements with speech, and even manages gestures based on context. For instance, it can detect audio cues and align hand gestures, like forming a prayer pose during spiritual discussions.

Try It Out

Upload an image, provide an audio clip, and tweak settings like resolution and sampling rates. In just a few steps, generate animations that look and feel real. Whether you're testing a single frame or an extended sequence, EchoMimicV2 ensures consistent results.

Limitations and Future Goals

- Predefined Hand Poses: EchoMimicV2 relies on user-provided hand poses, limiting automation. Future updates aim to generate hand movements directly from audio.

- Limited Full-Body Support: While robust with cropped half-body images, its performance drops with uncropped or full-body images.

EchoMimicV2 sets a new standard for animation by simplifying processes while delivering exceptional quality. By integrating simplified conditions with advanced features like APDH and HPA, it enables fluid, expressive animations that were previously difficult to achieve. Open-source availability ensures that anyone can harness this innovative technology for personal or professional use.

Published: Nov 27, 2024 at 7:39 PM