AI Risk Repository

While I was reading the news about a group of researchers at an Israeli cybersecurity firm who convinced a government AI to teach them how to make a bomb, another paper ran a piece about the launch of the AI Risk Repository.

The AI Risk Repository is a big database to track and analyze AI risks. It's a first attempt to rigorously curate, analyze, and extract AI risk frameworks into a publicly accessible, comprehensive, extensible, and categorized risk database. It has three parts:

- AI Risk Database: Over 700 risks from 43 existing frameworks, with quotes and page numbers.

- Causal Taxonomy of AI Risks: Classifies how, when, and why these risks occur.

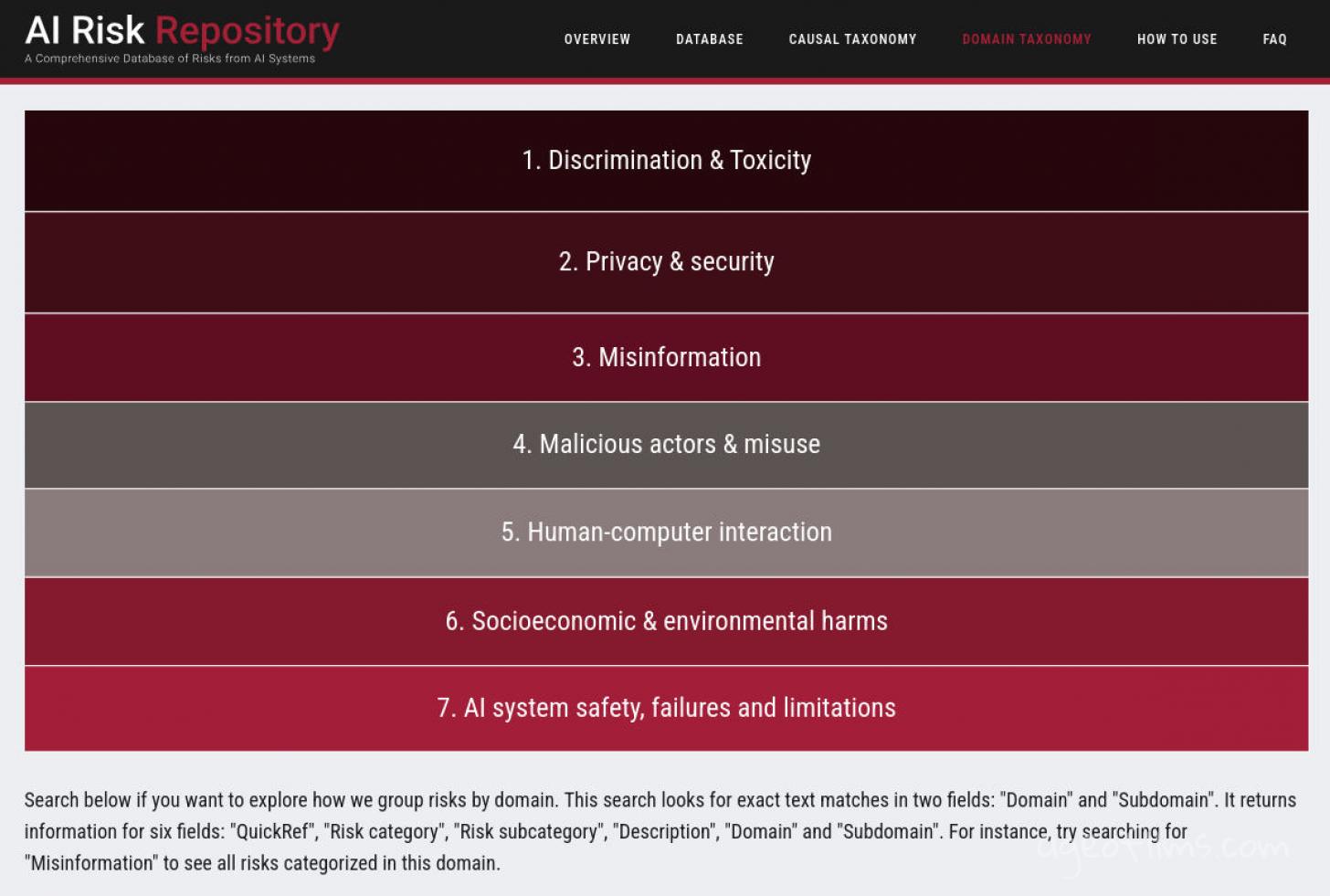

- Domain Taxonomy of AI Risks: Classifies risks into seven domains, like "Misinformation", and 23 subdomains, like "False or Misleading Information".

The causal taxonomy is the high-level classification and the domain taxonomy is the mid-level one. Each risk entry in the database records several details: paper title, author, risk category, and a brief description of the risk.
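To make the shape of an entry concrete, here's a minimal Python sketch. The field names, the `RiskEntry` class, the `risks_in_domain` helper, and the sample rows are all my own assumptions for illustration. They are not the repository's actual schema or data.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RiskEntry:
    """One risk entry (hypothetical field names, not the real schema)."""
    paper_title: str
    author: str
    risk_category: str
    description: str
    domain: str      # mid-level domain taxonomy, e.g. "Misinformation"
    subdomain: str   # e.g. "False or Misleading Information"


# Placeholder rows made up for this sketch; they do not come from the repository.
entries = [
    RiskEntry(
        paper_title="Example Framework A",
        author="Placeholder Author",
        risk_category="Placeholder category",
        description="Model outputs convincing but false claims.",
        domain="Misinformation",
        subdomain="False or Misleading Information",
    ),
    RiskEntry(
        paper_title="Example Framework B",
        author="Placeholder Author",
        risk_category="Placeholder category",
        description="Model leaks personal data.",
        domain="Privacy and Security",
        subdomain="Placeholder subdomain",
    ),
]


def risks_in_domain(rows: list[RiskEntry], domain: str) -> list[RiskEntry]:
    """Return every entry filed under the given domain."""
    return [row for row in rows if row.domain == domain]


misinformation_risks = risks_in_domain(entries, "Misinformation")
```

Filtering by `domain` or `subdomain` like this is the kind of lookup a categorized database makes easy.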

The Purpose of the AI Risk Repository

Making laws for AI, like the EU AI Act or California’s SB 1047, has been tough, partly because there’s no agreement on which risks to address. To help policymakers and industry stakeholders, MIT researchers created the AI Risk Repository, a simple, categorized database of AI risks. Peter Slattery, who leads the project, said many existing frameworks miss key risks, an omission that could affect AI development and regulation.

“This is an attempt to rigorously curate and analyze AI risks into a publicly accessible, comprehensive, extensible and categorized risk database that anyone can copy and use, and that will be kept up to date over time,” Slattery said. “We created it now because we needed it for our project, and had realized that many others needed it, too.”

The repository categorizes risks by causal factors, domains, and subdomains to show gaps in existing frameworks. For example, while over 70% of frameworks discussed privacy and security, only 44% discussed misinformation. Slattery says this will save time for researchers and give a foundation for understanding AI risks.
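A coverage figure like the ones above is the share of frameworks that mention a given domain. Here's a rough Python sketch of that arithmetic. The `domain_coverage` function and the four toy frameworks are my own assumptions, not the MIT team's code or data.

```python
from collections import Counter


def domain_coverage(framework_domains: dict[str, set[str]]) -> dict[str, float]:
    """Percentage of frameworks that discuss each domain."""
    total = len(framework_domains)
    if total == 0:
        return {}
    # Each framework counts at most once per domain because its domains are a set.
    counts = Counter(
        domain
        for domains in framework_domains.values()
        for domain in domains
    )
    return {domain: 100.0 * n / total for domain, n in counts.items()}


# Toy input made up for this sketch (hypothetical frameworks).
toy_frameworks = {
    "Framework A": {"Privacy and Security", "Misinformation"},
    "Framework B": {"Privacy and Security"},
    "Framework C": {"Privacy and Security"},
    "Framework D": {"Misinformation"},
}

coverage = domain_coverage(toy_frameworks)
```

With this toy input, three of four frameworks cover privacy and security and two of four cover misinformation. A low percentage flags a domain that existing frameworks tend to overlook.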

Implementation and Future Plans

The MIT team worked with various institutions to go through the academic literature and build this big database. They hope that a more complete picture will lead to better regulation and oversight of AI systems. Next, they plan to use the repository to evaluate how well different AI risks are being managed and to identify gaps in responses.

Sources

https://techcrunch.com/2024/08/14/mit-researchers-release-a-repository-of-ai-risks/

Published: Aug 15, 2024 at 6:56 PM